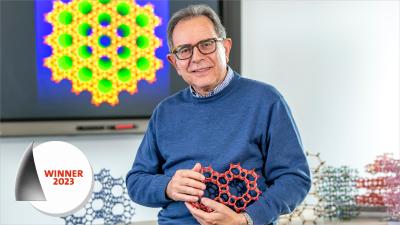

European Inventor Award

About the award

People with a passion for discovery drive innovation. Without their inquisitive minds and desire for new ideas, there would be no inventive spirit and no progress. The European Inventor Award pays tribute to inventors worldwide. It celebrates those who transform their ideas into technological progress, economic growth or improvements to our daily lives. Launched in 2006, the Award gives inventors the recognition they deserve and, like any good competition, it acts as an incentive for others.

The finalists 2023

Search for past finalists

The world’s brightest ideas and sharpest minds, all in one place. Use the filters to search by year, category, field, country or name.

Young Inventors Prize

Aimed at innovators aged 30 and under, this prize rewards those using technology to contribute to the United Nations' Sustainable Development Goals. A granted patent is not required, and all finalists receive a cash prize.

Popular Prize

Unlike the traditional Award categories and the Young Inventors Prize, the Popular Prize is decided by a public vote. In 2023, Patricia de Rango, Daniel Fruchart, Albin Chaise, Michel Jehan and Nataliya Skryabina claimed the trophy for their work on a safe and sustainable way to store hydrogen.

Contact

European Inventor Award and Young Inventors Prize queries:

european-inventor@epo.org

Subscribe to the European Inventor Award newsletter

Media-related queries:

Contact our Press team

#InventorAward #YoungInventors